Winding Time

2019.05 | Individual Work

Interactive Installation | Generative Art | Personal Time

Exhibition

Exhibited at the ITP Spring Show 2019.

Toolkit

Software:

JavaScript, Java, C

Machine Learning (PoseNet)

WebSocket

Processing

Arduino

MadMapper

Hardware:

Arduino Nano

Wind-up Motor

Laser-cut Acrylic

Credits

Solo work.

Winding Time is an experimental interactive installation that investigates personal time. Inspired by Einstein’s theory of relativity, Winding Time conveys how people’s relative speed affects their personal timelines and how their movement influences the way their time spreads within a particular space.

People think of time as a measurement because they see it as absolute. Consequently, we let time decide our lives: we face endless deadlines and are always busy fighting against time. I can’t stop wondering why people struggle so much with a notion that humans created.

Einstein’s theory of relativity, however, offers a new perspective: time is a changing element around us, not only dynamic but also personal. Each person has their own timeline, shaped by their speed relative to others and by their different interactions within a space.

Inspired by relativity, I tried to visualize personal time based on each person’s moving speed, expressing a small part of the theory artistically in the hope of opening up a new way for people to think about time.

These are a series of final results. Each image pairs the timelines of two individuals.

These timelines show how your body trace spreads differently from other people’s over the same duration and within the same space. How your body is stretched, and which parts are stretched, also differ depending on your personal speed.

Winding Time invites people to wind up a clockwork motor. As the motor releases, a stretched personal timeline is gradually generated from the recent past, with timestamps. People can observe their own past timelines and compare them with others’.

Technical Details

A webcam captures images, and the installation always keeps the most recent 1000 frames. When someone winds up the clockwork motor, the installation processes a number of frames that depends on how far the motor has been wound. The image processing is based on slit scan: the PoseNet machine learning model estimates each person’s speed, and the scan speed changes according to the speeds detected in the processed frames. The resulting images are generated gradually over time and projected into the space.
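The frame buffer and the wind-to-frames step above can be sketched as follows. This is a minimal JavaScript sketch under stated assumptions, not the installation’s actual code: `FrameBuffer` and `framesForWinding` are hypothetical names, frames are stored as opaque pixel data with a capture timestamp, and the wind-up amount is assumed to arrive as a fraction between 0 (unwound) and 1 (fully wound) that scales linearly to the 1000-frame buffer.

```javascript
// Hypothetical ring buffer that always keeps the most recent `capacity`
// frames, each stored together with its capture timestamp.
class FrameBuffer {
  constructor(capacity = 1000) {
    this.capacity = capacity;
    this.frames = new Array(capacity); // preallocated slots
    this.start = 0; // index of the oldest stored frame
    this.size = 0; // number of frames currently stored
  }

  // Add a frame; once the buffer is full, the oldest frame is overwritten.
  push(pixels, timestamp) {
    const end = (this.start + this.size) % this.capacity;
    this.frames[end] = { pixels, timestamp };
    if (this.size < this.capacity) {
      this.size++;
    } else {
      this.start = (this.start + 1) % this.capacity;
    }
  }

  // Return the most recent `count` frames, oldest first.
  latest(count) {
    const n = Math.min(count, this.size);
    const out = [];
    for (let i = this.size - n; i < this.size; i++) {
      out.push(this.frames[(this.start + i) % this.capacity]);
    }
    return out;
  }
}

// Hypothetical linear mapping (an assumption): the fraction of a full wind,
// clamped to 0..1, decides how many buffered frames go to the slit scan.
function framesForWinding(windFraction, capacity = 1000) {
  const f = Math.min(Math.max(windFraction, 0), 1);
  return Math.round(f * capacity);
}
```

For example, winding the motor a quarter of the way would hand the most recent `framesForWinding(0.25)` = 250 frames, oldest first, to the slit-scan step, and each frame’s timestamp stays available for labeling the generated timeline.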

Physical Interaction Design

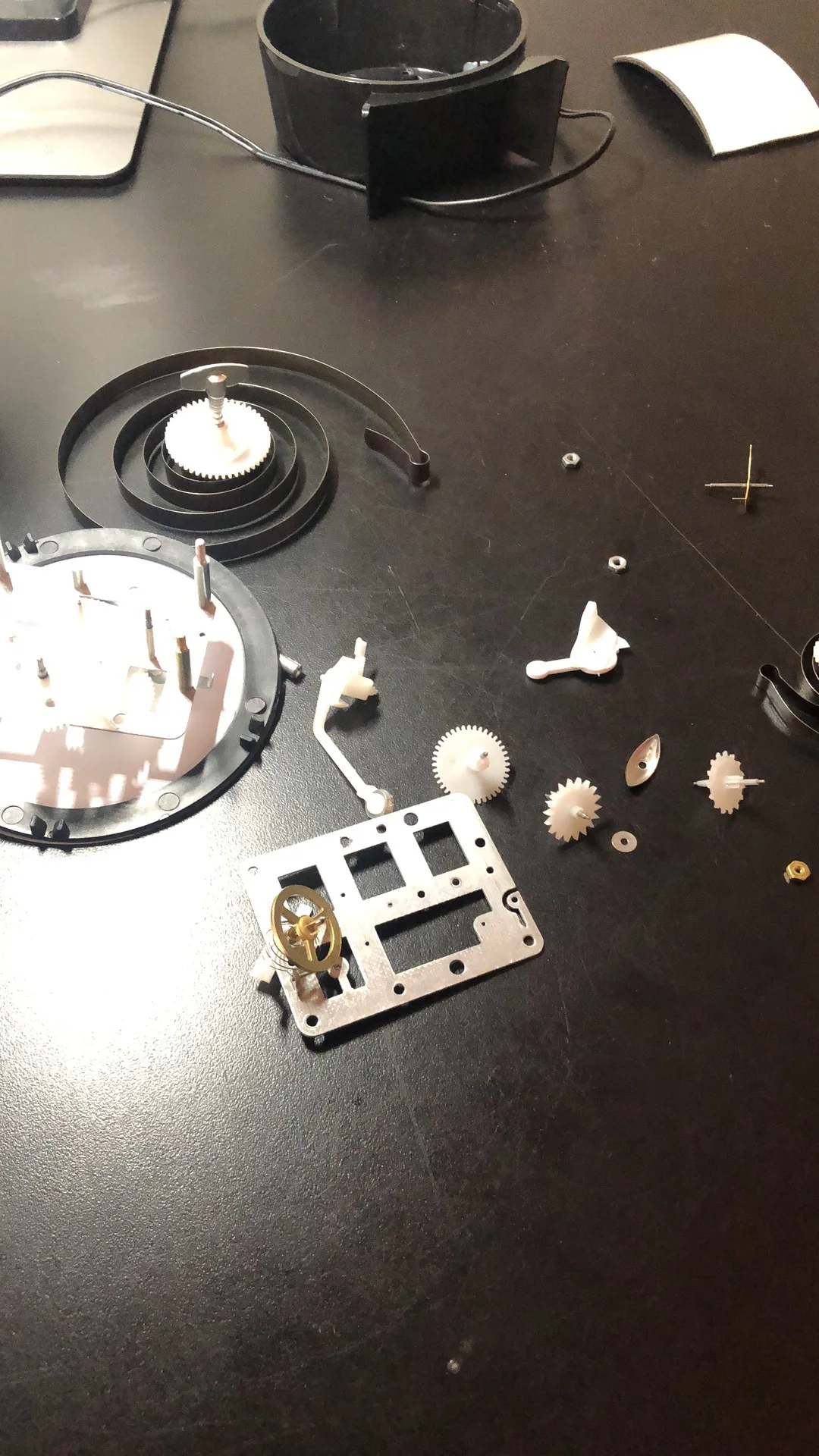

I chose this kind of classic, old-school motor because:

It is a classic clockwork motor, closely tied to time.

Its sound is distinctive and intriguing.

As it releases, it conveys a sense that movement is happening.

I tested different kinds of wind-up motors, from wind-up toys to clocks, in different prototypes.

This is the first working prototype of the physical interaction: an encoder senses how the motor turns, and an Arduino Nano sends the data back to the computer.
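The text doesn’t say which kind of encoder was used. Assuming a two-channel (quadrature) rotary encoder, the sketch below shows the standard lookup-table way of turning its A/B signals into a signed step count, so winding and releasing can be told apart by direction. On the Arduino Nano this logic would normally be written in C/C++; it is shown in JavaScript here for consistency with the other sketches. `QuadratureDecoder`, `motorPhase`, and the sign convention (positive steps = winding) are hypothetical.

```javascript
// Lookup table indexed by (previousState << 2) | currentState, where a state
// is (A << 1) | B. Valid Gray-code steps give +1 or -1; no change, or an
// invalid jump where both channels flip at once, gives 0.
const QUAD_TABLE = [0, -1, 1, 0, 1, 0, 0, -1, -1, 0, 0, 1, 0, 1, -1, 0];

class QuadratureDecoder {
  constructor() {
    this.state = 0; // last seen (A << 1) | B
    this.count = 0; // signed step count since start
  }

  // Feed one sample of the two encoder channels (each 0 or 1).
  // Returns the step taken: +1, -1 or 0.
  update(a, b) {
    const next = ((a & 1) << 1) | (b & 1);
    const step = QUAD_TABLE[(this.state << 2) | next];
    this.state = next;
    this.count += step;
    return step;
  }
}

// Hypothetical convention: positive steps mean the spring is being wound,
// negative steps mean it is releasing.
function motorPhase(step) {
  if (step > 0) return "winding";
  if (step < 0) return "releasing";
  return "idle";
}
```

The accumulated `count` at the end of winding could then be scaled into a wind fraction, and each releasing step could trigger the next slice of the generated timeline.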

Digital Processing Algorithm

How to visualize time?

While researching and experimenting with different ways of visualizing time in 2D, I found slit scan to be an effective and artistic approach.

I played with scan noise, scan direction, and scan speed, and found that each experiment conveyed a different feeling about time. Scanning vertically, from the bottom to the top, turned out to be the best way to convey my idea.

Here are some quick prototypes I made. All of these images were processed from videos.

Slit scan records people’s movement into their timelines, and different movements generate differently stretched body traces.
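A minimal sketch of a vertical, bottom-to-top slit scan with a variable scan speed, assuming each frame is an array of pixel rows (row 0 at the top). This is one possible formulation, not necessarily the one used in the installation: for each processed frame, the row under the slit is copied into the output, then the slit moves up by that frame’s scan speed. When the scan speed drops to 0, the same source row is copied from successive frames, which is what stretches a moving body vertically. `slitScan` is a hypothetical name.

```javascript
// Hypothetical slit-scan sketch. Each frame is an array of pixel rows
// (row 0 = top); scanSpeeds[i] is how many rows the slit moves up after
// frame i is sampled. Returns the generated image, top row first.
function slitScan(frames, scanSpeeds) {
  if (frames.length === 0) return [];
  const height = frames[0].length;
  let slitY = height - 1; // the slit starts at the bottom row
  const rows = []; // generated rows, collected bottom-first
  for (let i = 0; i < frames.length; i++) {
    const y = Math.round(slitY);
    rows.push(frames[i][y].slice()); // copy the row under the slit
    slitY -= scanSpeeds[i]; // a scan speed of 0 keeps the slit in place
    if (slitY < 0) break; // the slit has passed the top of the frame
  }
  return rows.reverse();
}
```

With a constant speed of 1, a 3-row frame yields a 3-row image; pausing the slit for two frames yields 5 rows, with the middle source row repeated across three moments in time.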

But how could I add one more factor, personal speed, and generate different timelines depending on speed, in the context of relativity?

Use the PoseNet machine learning model to detect how many people are in front of the installation and how they move;

Use the captured data to calculate each person’s speed;

Map it to scan speed;

Generate differently stretched timelines.

Specifically, when a person’s speed exceeds a threshold, the scan speed changes and the generated image starts to stretch; this is how personal speed affects the final timeline.
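Calculating each person’s speed and mapping it to scan speed with a threshold might look like the sketch below. It assumes pose objects shaped like PoseNet’s output, `{ score, keypoints: [{ part, score, position: { x, y } }] }` (wrappers such as ml5.js may nest this differently), and defines speed as the mean displacement of confidently detected keypoints between two frames, in pixels per second. The threshold value, the confidence cutoff, and the choice to stop the slit (scan speed 0) above the threshold are assumptions, the last based on the two-person demo where the red line stops while a person moves. `personalSpeed` and `scanSpeedFor` are hypothetical names.

```javascript
// Hypothetical speed estimate: mean displacement (pixels per second) of one
// person's confident keypoints between two poses, matched by `part` name.
function personalSpeed(prevPose, currPose, dtSeconds, minScore = 0.5) {
  const prev = new Map();
  for (const kp of prevPose.keypoints) {
    if (kp.score >= minScore) prev.set(kp.part, kp.position);
  }
  let total = 0;
  let matched = 0;
  for (const kp of currPose.keypoints) {
    const p = prev.get(kp.part);
    if (kp.score < minScore || p === undefined) continue; // skip unreliable points
    total += Math.hypot(kp.position.x - p.x, kp.position.y - p.y);
    matched++;
  }
  if (matched === 0 || dtSeconds <= 0) return 0;
  return total / matched / dtSeconds;
}

// Hypothetical threshold rule: below the threshold the slit advances at its
// normal speed; above it the slit uses `movingScanSpeed` (0 = stops), so the
// generated trace stretches.
function scanSpeedFor(speed, threshold, baseScanSpeed = 1, movingScanSpeed = 0) {
  return speed > threshold ? movingScanSpeed : baseScanSpeed;
}
```

Averaging over keypoints keeps a single jittery joint from triggering the threshold; a smoothed speed over several frames would be a further option.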

In this demo, the red lines in the two upper videos show the scan positions, and the generated timelines keep growing. The left timeline is based on the left person’s moving speed, and the right timeline on the other person’s. When the left person starts moving, the left red line stops, which generates a stretched body trace in the left timeline; the same happens on the right side. This shows how people moving at different speeds in the same video generate two different timelines.
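To drive two timelines from one camera, as in this demo, each detected person has to be tied to a side. A hedged sketch, assuming one person per half of the frame: poses are assigned to the left or right half by the mean x position of their confident keypoints (keeping the highest-scoring pose per half), and each side keeps its own slit row, which pauses while that side’s person moves faster than the threshold. `poseCenterX`, `assignSides`, and `advanceSlits` are hypothetical names, and the pose shape is assumed to match PoseNet’s `{ score, keypoints }` output.

```javascript
// Mean x of a pose's confident keypoints, or null if none are confident.
function poseCenterX(pose, minScore = 0.5) {
  let sum = 0;
  let n = 0;
  for (const kp of pose.keypoints) {
    if (kp.score >= minScore) {
      sum += kp.position.x;
      n++;
    }
  }
  return n === 0 ? null : sum / n;
}

// Assign poses to the left or right half of the frame (assumption: one
// person per half), keeping the highest-scoring pose on each side.
function assignSides(poses, frameWidth) {
  const sides = { left: null, right: null };
  for (const pose of poses) {
    const x = poseCenterX(pose);
    if (x === null) continue;
    const side = x < frameWidth / 2 ? "left" : "right";
    if (sides[side] === null || pose.score > sides[side].score) sides[side] = pose;
  }
  return sides;
}

// Each side has its own slit row (counting down toward the top). A side's
// slit pauses while its person's speed is above the threshold; otherwise it
// moves up one row, an assumed base scan speed.
function advanceSlits(slits, speeds, threshold) {
  for (const side of ["left", "right"]) {
    if (!(speeds[side] > threshold)) slits[side] = Math.max(0, slits[side] - 1);
  }
  return slits;
}
```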

Documentation / Show Pictures

Full Documentation

Next Step

Next, I’ll keep exploring the concept of personal time and take other possible factors into account.

During the show, people kept saying that the winding motion and the sound were super satisfying, so I would like to make more wind-up objects and build a series of installations in which each wind-up motor uses a different factor to change the final personal timeline.